March 4, 2025

What Is Chain of Thought Prompting? Understanding This AI Technique

Chain of thought (CoT) prompting differs from other prompting methods in one main way. Traditional prompting follows a simple pattern: ask a question, receive an answer. This direct approach has its merits, and it is how most people expect AI to work, but it often falls short when the model is asked to handle a complex, multi-step task.

Chain of thought prompting takes a different approach: it asks the model to break down its process, much as you would show your work on an arithmetic test. As it works through the problem, the model explains each step it took to reach its conclusion. This helps users follow the reasoning and helps the model itself arrive at more accurate answers.

This guide explains CoT prompting in more detail and why it matters for artificial intelligence.

What Is Chain of Thought Prompting?

As the name suggests, chain of thought prompting asks the model to express its answer as a chain, with each step building on the previous one. Rather than giving a direct response, the model explains how it arrives at the answer, which makes mistakes easier to detect and avoid. CoT is well suited to complex tasks, including:

- Analyzing the nuances and context of a message to judge its tone, a task known as sentiment analysis.

- Solving mathematical problems (remember our allusion to arithmetic tests?).

- Carrying out logical and commonsense reasoning that takes several steps.

- Providing a solution to a technical problem, such as a bug.

Chain of Thought Prompting Examples

The best way to understand the difference between regular and CoT prompting is through examples, so let us compare, shall we?

Example 1: Mathematical Problem-Solving

Regular Prompt:

Q: If a train travels 120 miles in 3 hours, what is its average speed?

A: 40 miles per hour.

CoT Prompt:

Q: If a train travels 120 miles in 3 hours, what is its average speed? Let’s solve this step by step.

A:

- Speed is calculated using the formula: Speed = Distance ÷ Time.

- The total distance traveled is 120 miles.

- The total time taken is 3 hours.

- Performing the division: 120 ÷ 3 = 40.

- Final answer: 40 miles per hour.
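As a rough sketch of the difference between the two prompts, the only change is a reasoning cue appended to the question. The helper names `to_cot_prompt` and `average_speed` below are illustrative assumptions, not part of any library; the arithmetic simply confirms the answer in the steps above.

```python
def to_cot_prompt(question: str, cue: str = "Let's solve this step by step.") -> str:
    """Turn a regular prompt into a CoT prompt by appending a reasoning cue."""
    return f"{question.strip()} {cue}"


def average_speed(distance_miles: float, time_hours: float) -> float:
    """Speed = Distance / Time, the formula used in the worked answer."""
    return distance_miles / time_hours


question = "If a train travels 120 miles in 3 hours, what is its average speed?"
regular_prompt = f"Q: {question}"
cot_prompt = f"Q: {to_cot_prompt(question)}"

print(regular_prompt)
print(cot_prompt)
print(average_speed(120, 3))  # 40.0
```

The cue is a parameter so that other phrasings, such as "Let's break it down," can be swapped in without changing the rest of the code.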

Example 2: Logical Deduction

Regular Prompt:

Q: If all roses are flowers and some flowers fade quickly, does it mean all roses fade quickly?

A: No.

CoT Prompt:

Q: If all roses are flowers and some flowers fade quickly, does it mean all roses fade quickly? Let’s analyze it step by step.

A:

- Step 1: We know that all roses are flowers.

- Step 2: We also know that some (but not all) flowers fade quickly.

- Step 3: Since “some” does not mean “all,” we cannot conclude that all roses fade quickly.

- Final conclusion: No, the statement is incorrect. Some flowers fade quickly, but that does not necessarily apply to all roses.
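The deduction can also be checked with a small counterexample. The sets below are hypothetical, chosen only so that both premises hold; if the proposed conclusion is still false, the premises cannot guarantee it.

```python
# Hypothetical example sets chosen to satisfy both premises.
flowers = {"rose", "tulip", "poppy"}
roses = {"rose"}
fades_quickly = {"poppy"}

# Premise 1: all roses are flowers (roses is a subset of flowers).
all_roses_are_flowers = roses <= flowers

# Premise 2: some flowers fade quickly (the two sets overlap).
some_flowers_fade_quickly = bool(flowers & fades_quickly)

# Proposed conclusion: all roses fade quickly.
all_roses_fade_quickly = roses <= fades_quickly

print(all_roses_are_flowers, some_flowers_fade_quickly, all_roses_fade_quickly)
# True True False
```

Both premises are true while the conclusion is false, which matches the "No" reached in the step-by-step answer.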

Example 3: Commonsense Reasoning

Regular Prompt:

Q: A man has a broken umbrella, and it starts raining. Will he stay dry?

A: No.

CoT Prompt:

Q: A man has a broken umbrella, and it starts raining. Will he stay dry? Let’s break it down.

A:

- Step 1: A functional umbrella provides protection from the rain.

- Step 2: The umbrella, in this case, is broken, meaning it likely has holes or does not fully open.

- Step 3: If the umbrella cannot properly shield him, he will likely get wet.

- Final answer: No, he will not stay dry.

Example 4: Sentiment Analysis

Regular Prompt:

Q: What is the sentiment of this review? “The restaurant had great food, but the service was incredibly slow and frustrating.”

A: Negative.

CoT Prompt:

Q: What is the sentiment of this review? “The restaurant had great food, but the service was incredibly slow and frustrating.” Let’s analyze it step by step.

A:

- Step 1: The review mentions that the food was “great,” which is positive.

- Step 2: However, it also states that the service was “incredibly slow and frustrating,” which is negative.

- Step 3: Since the negative remarks concern the service, which affects the overall experience, the sentiment is mixed, leaning slightly negative.

- Final conclusion: Mixed sentiment (slightly negative).
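To make the clause-by-clause reasoning concrete, here is a toy sketch that mirrors the three steps. It is not how a language model works internally: the word lists `POSITIVE` and `NEGATIVE` and the function `analyze_sentiment` are hypothetical, made up for this illustration.

```python
import re

# Hypothetical mini-lexicons, for illustration only.
POSITIVE = {"great", "delicious", "friendly"}
NEGATIVE = {"slow", "frustrating", "rude"}


def analyze_sentiment(review: str) -> tuple[list[str], str]:
    """Return the per-clause reasoning steps and an overall label."""
    steps = []
    positives = negatives = 0
    # Split on "but" so each contrasting clause is judged separately.
    for clause in re.split(r",?\s+but\s+", review.lower()):
        words = set(re.findall(r"[a-z]+", clause))
        pos_hits = sorted(words & POSITIVE)
        neg_hits = sorted(words & NEGATIVE)
        positives += len(pos_hits)
        negatives += len(neg_hits)
        steps.append(f"{clause.strip(' .')!r}: positive={pos_hits}, negative={neg_hits}")

    if positives and negatives:
        if negatives > positives:
            label = "mixed (slightly negative)"
        elif positives > negatives:
            label = "mixed (slightly positive)"
        else:
            label = "mixed"
    elif positives:
        label = "positive"
    elif negatives:
        label = "negative"
    else:
        label = "neutral"
    return steps, label


review = "The restaurant had great food, but the service was incredibly slow and frustrating."
steps, label = analyze_sentiment(review)
for step in steps:
    print(step)
print(label)
```

Keeping the intermediate `steps` alongside the final label is the point: a reader can see why the verdict is "mixed" rather than simply "negative."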

Example 5: Coding & Debugging

Regular Prompt:

Q: What is wrong with this Python function?

    def divide_numbers(a, b):
        return a / b

A: Division by zero error possible.

CoT Prompt:

Q: What is wrong with this Python function? Let’s analyze it step by step.

A:

- Step 1: The function takes two parameters, a and b, and returns a / b.

- Step 2: There is no validation for b, meaning that if b = 0, the function will raise a ZeroDivisionError.

- Step 3: To fix this, we need to check if b == 0 and handle the error gracefully.

- Fixed Code:

      def divide_numbers(a, b):
          if b == 0:
              return "Error: Division by zero is not allowed."
          return a / b
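The fix in the sample answer returns a string when b is zero, so callers receive either a number or a message. One alternative sketch, offered as a design option rather than the only correct fix, raises an exception instead so the return type stays numeric.

```python
def divide_numbers(a: float, b: float) -> float:
    """Divide a by b, raising a clear error instead of returning a string."""
    if b == 0:
        raise ValueError("Division by zero is not allowed.")
    return a / b


try:
    divide_numbers(1, 0)
except ValueError as error:
    # The caller decides how to report or recover from the problem.
    print(f"Error: {error}")
```

Which version is preferable depends on the calling code; the exception-based form is usually easier to handle in larger programs because errors cannot be mistaken for results.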

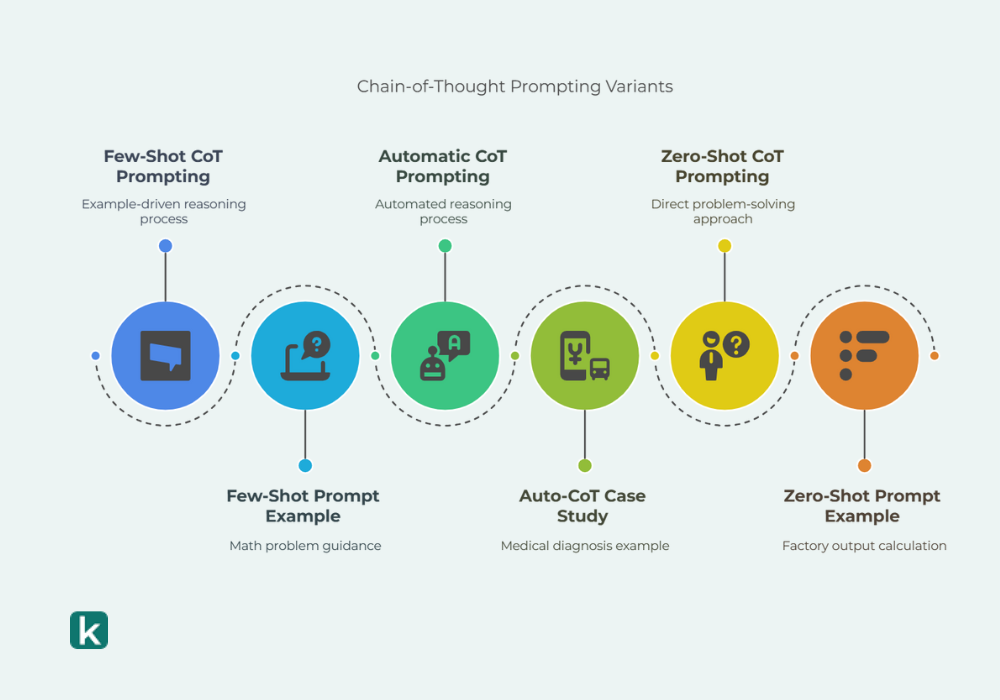

Chain-of-Thought Prompting Variants

Chain of thought prompts can be phrased in different ways. Here are some common variants:

1. Few-Shot CoT Prompting

To use the few-shot variant, you need one or more examples of your desired reasoning process. You provide these examples to the model as a guide, and it follows the same pattern. Few-shot prompting helps the model align with your preferred approach right from the start.

For instance, here’s what a few-shot prompt would look like:

Prompt:

“Here are examples of solving math problems step by step. Follow the same method to solve the next one.”

Examples:

Q: What is 14 × 12?

A: First, break it down:

14 × 12 = (14 × 10) + (14 × 2)

14 × 10 = 140

14 × 2 = 28

140 + 28 = 168

Now your turn:

Q: What is 18 × 15?
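A few-shot prompt like this can be assembled from a list of worked examples. The sketch below is one possible layout; the function name `build_few_shot_prompt` is an assumption for illustration, and the final lines only verify the arithmetic the model would be expected to produce for the new question.

```python
def build_few_shot_prompt(instruction: str, examples: list[tuple[str, str]], new_question: str) -> str:
    """Combine an instruction, worked examples, and a new question into one prompt."""
    parts = [instruction, ""]
    for question, worked_answer in examples:
        parts.append(f"Q: {question}")
        parts.append(f"A: {worked_answer}")
        parts.append("")
    parts.append(f"Q: {new_question}")
    parts.append("A:")  # left open for the model to complete
    return "\n".join(parts)


examples = [
    (
        "What is 14 × 12?",
        "First, break it down:\n"
        "14 × 12 = (14 × 10) + (14 × 2)\n"
        "14 × 10 = 140\n"
        "14 × 2 = 28\n"
        "140 + 28 = 168",
    ),
]

prompt = build_few_shot_prompt(
    "Here are examples of solving math problems step by step. "
    "Follow the same method to solve the next one.",
    examples,
    "What is 18 × 15?",
)
print(prompt)

# The same decomposition applied to the new question.
expected = (18 * 10) + (18 * 5)
print(expected)  # 270
```

Adding more pairs to `examples` extends the guide without changing the function.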

2. Automatic Chain of Thought Prompting (Auto-CoT)

For Automatic Chain of Thought prompting, you don’t write the reasoning guide by hand. Instead, the model generates its own step-by-step demonstrations, typically by applying a cue such as “Let’s think step by step” to a varied sample of questions, and those generated chains then serve as the guide. Because of its structured approach, Auto-CoT can suit fields such as science, medicine, and law, where users conduct research, work toward a diagnosis, or analyze legal documents.

This variant relies on large language models (LLMs) to produce the reasoning, often paired with an algorithm such as clustering to pick a diverse set of questions. Automating the examples saves manual effort, but the generated chains can still contain mistakes, so they are worth reviewing.

Here’s an illustrative example of the kind of reasoning chain this variant produces:

Q: If a patient has symptoms X, Y, and Z, what could be the possible diagnosis?

A:

- Step 1: Identify diseases associated with each symptom.

- Step 2: Cross-check overlapping symptoms to rule out unlikely conditions.

- Step 3: Compare against medical knowledge to determine the most probable diagnosis.

- Final Answer: Condition A or B (requires further testing for confirmation).
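One way to picture the automatic part is a loop that writes the demonstrations for you. The sketch below is a simplification under stated assumptions: `generate` stands in for whatever LLM call you use (it is a hypothetical callable, not a real API), and questions are grouped by a caller-supplied key rather than by the embedding-based clustering used in the published Auto-CoT method.

```python
from typing import Callable, Iterable

CUE = "Let's think step by step."


def build_auto_cot_demos(
    questions: Iterable[str],
    generate: Callable[[str], str],
    group_key: Callable[[str], str],
) -> list[str]:
    """Pick one question per group and let the model write its reasoning chain."""
    representatives: dict[str, str] = {}
    for question in questions:
        # Keep the first question seen in each group so the demos stay diverse.
        representatives.setdefault(group_key(question), question)

    demos = []
    for question in representatives.values():
        chain = generate(f"Q: {question}\nA: {CUE}")  # zero-shot CoT call
        demos.append(f"Q: {question}\nA: {CUE} {chain}")
    return demos


def auto_cot_prompt(demos: list[str], new_question: str) -> str:
    """Prepend the generated demonstrations to the new question."""
    return "\n\n".join(demos + [f"Q: {new_question}\nA: {CUE}"])


# Stand-in for a real model call, used only so the sketch runs end to end.
def fake_generate(prompt: str) -> str:
    return "(model-written reasoning would appear here)"


questions = ["What is 12 + 30?", "What is 7 + 8?", "Is a whale a fish?"]
# Grouping by the first word is a crude placeholder for real clustering.
demos = build_auto_cot_demos(questions, fake_generate, group_key=lambda q: q.split()[0])
print(auto_cot_prompt(demos, "What is 18 × 15?"))
```

Passing `generate` and `group_key` as arguments keeps the sketch independent of any particular model or clustering library.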

3. Zero-Shot CoT Prompting

Zero-shot CoT prompting is the opposite of the few-shot variant. Rather than providing worked examples, the user simply instructs the model to reason through the problem step by step. Suitable examples are not always available, hence the need for this variant.

How does it play out in practice?

Prompt:

“Solve the following problem step by step.”

Q: A factory produces 250 items per day. How many items are produced in a week?

A:

- There are 7 days in a week.

- The factory produces 250 items each day.

- Multiplying: 250 × 7 = 1,750 items per week.
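Once a model answers step by step, you often need to pull the final value out of the explanation. The sketch below assumes the last non-empty line of the response contains the result as its last number; that convention and the helper name `extract_final_number` are assumptions for illustration.

```python
import re


def extract_final_number(response: str) -> float:
    """Return the last number on the last non-empty line of a step-by-step answer."""
    lines = [line for line in response.splitlines() if line.strip()]
    if not lines:
        raise ValueError("empty response")
    # Match digits with optional thousands separators and decimals, e.g. 1,750 or 3.5.
    matches = re.findall(r"\d[\d,]*(?:\.\d+)?", lines[-1])
    if not matches:
        raise ValueError("no number found in the final line")
    return float(matches[-1].replace(",", ""))


response = """\
- There are 7 days in a week.
- The factory produces 250 items each day.
- Multiplying: 250 × 7 = 1,750 items per week.
"""

answer = extract_final_number(response)
print(answer)            # 1750.0
print(answer == 250 * 7)  # True
```

Comparing the extracted value with an independent calculation is a simple way to spot-check arithmetic answers.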

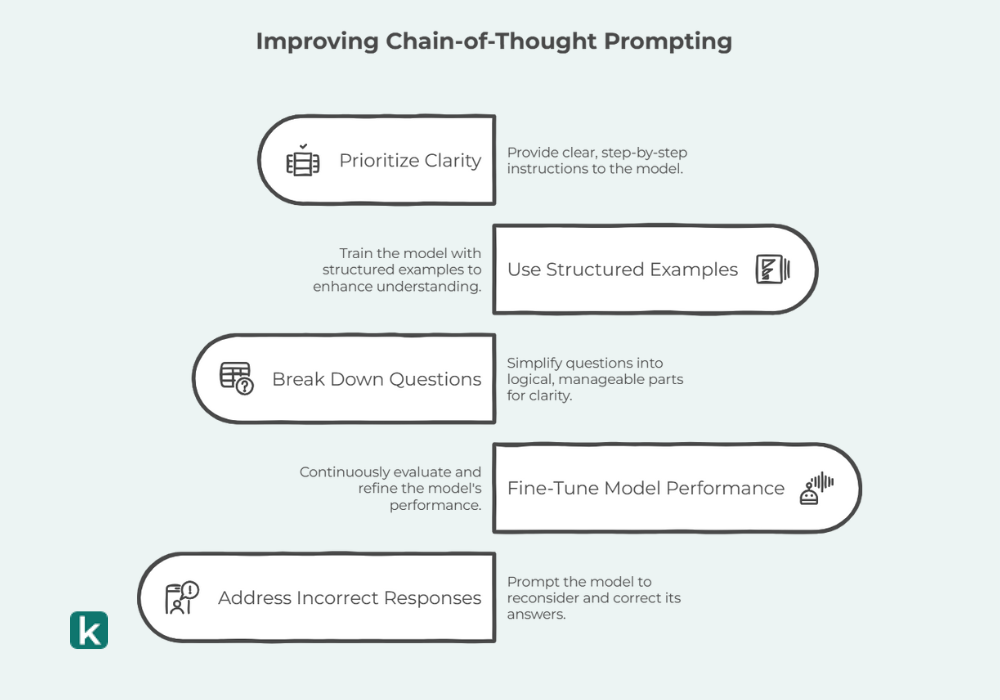

How Do We Improve Chain-of-Thought Prompting?

Chain of thought prompting has clear benefits over traditional prompting, but it is not perfect. You may face challenges such as prompts that are not clear enough to trigger step-by-step reasoning. Here are some ways to get more out of CoT:

1. Prioritize Clarity

To improve on CoT, start by giving clear instructions. This approach relies on structured reasoning, so the model must understand what you want. Because a model will often default to a direct answer, state explicitly that you want a breakdown of steps.

For instance, tell the model to “explain your reasoning” or “solve step by step.” Avoid bare questions, as they tend to invite direct answers. “What is 200 × 45?” is not as effective as “Solve 200 × 45 step by step.”

2. Use Structured Examples

Your model will give better CoT responses when you guide it with examples. The more well-structured examples you provide, the better it recognizes the approach you want and adapts to it. Technical tasks like coding and math benefit from these examples even more.

Example:

Q: How does photosynthesis work?

❌ “Plants convert sunlight into energy.” (Oversimplified)

✅ “Step 1: Plants absorb sunlight using chlorophyll.

Step 2: Light energy converts carbon dioxide and water into glucose and oxygen.

Step 3: The plant uses glucose for energy and releases oxygen into the air.”

3. Do Some Breaking Down Yourself

Even in human conversations, a broad question from Person A is likely to get a vague response from Person B. The quality of an answer depends heavily on the quality of the question. Therefore, with AI models, break your questions into logical parts to make CoT effective.

Example:

❌ “Explain how a car engine works.” (Too broad)

✅ “Break down the process of how fuel powers a car engine.” (Encourages step-by-step reasoning)

4. Fine-Tune Model Performance

Review your model’s performance regularly to make sure its results stay accurate. If you fine-tune the model, continuing to train it on CoT data helps keep its reasoning sharp. This matters most in specialized domains like finance, law, and scientific research. For example, a model that is continually trained on legal documents will give better, more specific responses to legal questions.

5. When Incorrect, Have the Model Rework the Response

AI models have flaws, so even with a good chain of thought prompt, you may not get what you want. When faced with an error, give a follow-up prompt like “Can you break this down further?” or “Think again; does this answer make sense?”

Example:

Q: What is 17 × 24?

A: 400. (Incorrect)

Q: Think again, does this answer make sense? Solve it step by step.

A:

- 17 × 24 = (17 × 20) + (17 × 4)

- 17 × 20 = 340

- 17 × 4 = 68

- 340 + 68 = 408
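For arithmetic, the check that triggers the follow-up prompt can be automated. This is a minimal sketch that only works when the correct answer can be computed independently; the function names `decompose_product` and `follow_up_if_wrong` are hypothetical.

```python
from typing import Optional


def decompose_product(a: int, b: int) -> list[str]:
    """Split b into tens and ones, mirroring the worked steps above."""
    tens, ones = (b // 10) * 10, b % 10
    return [
        f"{a} × {b} = ({a} × {tens}) + ({a} × {ones})",
        f"{a} × {tens} = {a * tens}",
        f"{a} × {ones} = {a * ones}",
        f"{a * tens} + {a * ones} = {a * tens + a * ones}",
    ]


def follow_up_if_wrong(a: int, b: int, claimed: int) -> Optional[str]:
    """Return a retry prompt when the claimed product is wrong, else None."""
    if claimed == a * b:
        return None
    return "Think again, does this answer make sense? Solve it step by step."


print(follow_up_if_wrong(17, 24, 400))  # the retry prompt, since 400 is wrong
for step in decompose_product(17, 24):
    print(step)
```

For tasks without a computable ground truth, the same follow-up prompt still applies, but the decision to send it has to come from a human reviewer or another check.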

Frequently Asked Questions

What Is Chain-of-Thought Prompting?

Chain-of-thought (CoT) prompting is a technique that encourages language models to break down complex problems into step-by-step reasoning, improving accuracy and transparency in responses.

How Does Chain-of-Thought Prompting Improve AI Responses?

By explicitly articulating intermediate reasoning steps, CoT prompting helps models perform better on tasks requiring logical reasoning, such as arithmetic, commonsense reasoning, and sentiment analysis.

What Are the Key Differences Between CoT and Standard Prompting?

Standard prompting provides direct answers without breaking down the thought process, while CoT prompting encourages models to explain their reasoning step by step, leading to more reliable and interpretable results.

Can Chain-of-Thought Prompting Be Applied to All AI Tasks?

While CoT prompting is highly effective for reasoning-based tasks, it may not always be necessary for simple fact-based queries where direct answers suffice.

Conclusion

Chain-of-thought prompting is a significant step forward in prompt engineering, improving how well models reason through and solve multi-step problems.

Whether for arithmetic, commonsense reasoning, or sentiment analysis, CoT enhances model outputs by making their thought process explicit. As AI continues to evolve, refining CoT techniques will play a vital role in making LLMs more reliable and interpretable.